Introduction

AI at the edge is becoming increasingly important, and with devices like the NVIDIA Jetson, running sophisticated machine learning models locally is more accessible than ever.

Why Deploy AI at the Edge? What Are the Benefits of Edge AI?

Edge AI is changing how artificial intelligence is deployed and used across industries. Instead of relying on cloud computing, edge AI processes data locally on devices like the NVIDIA Jetson.

Key Benefits of Edge AI

- Low Latency & Real-Time Processing: AI at the edge enables instant data processing, critical for autonomous systems and industrial automation.

- Enhanced Privacy & Security: Data that is processed locally never has to leave the device, which reduces exposure to network-based attacks and makes compliance with privacy laws easier.

- Reduced Bandwidth & Cloud Costs: Less reliance on cloud servers reduces bandwidth usage and operational costs, especially in remote areas.

- Increased Reliability: Edge AI works offline or with limited connectivity, ensuring uninterrupted performance in mission-critical applications.

- Energy Efficiency & Sustainability: Processing data locally on low-power hardware avoids the energy cost of streaming it to the cloud, which suits battery-powered devices like drones and smart sensors.

- Scalability & Flexibility: Edge AI supports distributed computing, enabling cost-effective, large-scale deployments across multiple locations.

What is VILA?

Efficient-Large-Model/VILA1.5-3b is part of NVIDIA’s VILA (Visual Language Intelligence and Applications) series, which combines visual and textual data for multimodal understanding. The VILA1.5-3b model has approximately 3 billion parameters and was trained on 53 million interleaved image-text pairs. This training enables tasks such as multi-image reasoning, in-context learning, and visual chain-of-thought processing. A notable feature of VILA1.5-3b is how well it deploys: with 4-bit quantization through the TinyChat framework, the model runs efficiently on edge devices, including NVIDIA Jetson modules.
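To see why 4-bit quantization matters on a Jetson, a rough back-of-the-envelope estimate of the weight footprint helps. The sketch below assumes exactly 3 billion parameters and counts only the raw weights. Quantization scales, the vision encoder, activations, and the KV cache all add to this, so treat the result as a lower bound rather than a measurement.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Assumption: "approximately 3 billion parameters" is taken as exactly 3e9.
PARAMS = 3e9

for bits in (16, 4):
    print(f"{bits}-bit weights: ~{weight_footprint_gb(PARAMS, bits):.1f} GB")
```

Under these assumptions the weights shrink from roughly 6 GB at 16-bit precision to roughly 1.5 GB at 4-bit, which leaves far more of a 16 GB module's shared memory for the rest of the pipeline.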

Prerequisites

- AVerMedia D115WOXB industrial-standard AI box PC equipped with an NVIDIA Jetson Orin NX/Orin Nano module (16 GB)

- JetPack 6.2 (L4T r36.x)

- Efficient-Large-Model/VILA1.5-3b model

Global architecture diagram

Getting Started: Setting Up Live LLaVA on Jetson

Setting up Live LLaVA on Jetson involves multiple steps:

- Preparing the environment: Use SDK Manager to flash the Jetson Orin. After flashing, reboot the device and verify the installation:

nvcc --version

You should see the installed CUDA version, confirming a successful installation.
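If you want to script this check, for example in a provisioning job, the release number can be pulled out of the nvcc output. This is a minimal sketch: the helper names are hypothetical, and it relies only on the `release X.Y` fragment that nvcc prints in its version banner.

```python
import re
import subprocess
from typing import Optional


def parse_cuda_release(nvcc_output: str) -> Optional[str]:
    """Return the CUDA release (such as '12.6') found in nvcc's banner, or None."""
    match = re.search(r"release\s+(\d+\.\d+)", nvcc_output)
    return match.group(1) if match else None


def installed_cuda_release() -> Optional[str]:
    """Run `nvcc --version` and parse it; returns None if nvcc cannot be run."""
    try:
        result = subprocess.run(
            ["nvcc", "--version"], capture_output=True, text=True, check=True
        )
    except (FileNotFoundError, subprocess.CalledProcessError):
        return None
    return parse_cuda_release(result.stdout)


if __name__ == "__main__":
    print(installed_cuda_release() or "nvcc not found; check that CUDA is on PATH")
```

If the script reports that nvcc is missing on a freshly flashed device, the CUDA bin directory may simply not be on your PATH yet.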

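Set the Jetson to its maximum-performance power mode (MAXN). Mode IDs can differ between modules, so confirm the active mode afterwards with sudo nvpmodel -q: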

sudo nvpmodel -m 0

- Installing the necessary packages: Make sure the camera is properly connected and detected, then install jetson-utils and the container tools via jetson-containers:

git clone https://github.com/dusty-nv/jetson-containers

bash jetson-containers/install.sh

The NVIDIA container runtime is installed but is not Docker's default runtime. To make it the default, add "default-runtime": "nvidia" to the /etc/docker/daemon.json config file. After saving the file, restart Docker (sudo systemctl restart docker) for the change to take effect. The file should look like this:

{
    "runtimes": {
        "nvidia": {
            "path": "nvidia-container-runtime",
            "runtimeArgs": []
        }
    },
    "default-runtime": "nvidia"
}
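Editing the file by hand is fine, but if daemon.json already holds other settings it is easy to overwrite them. The sketch below shows one way to merge the key into an existing configuration without touching anything else. The function name is hypothetical, and it works on a plain dictionary so that you decide how the file is read and written.

```python
import json


def with_nvidia_default_runtime(config: dict) -> dict:
    """Return a copy of a Docker daemon config with nvidia as the default runtime."""
    updated = json.loads(json.dumps(config))  # deep copy via a JSON round-trip
    runtimes = updated.setdefault("runtimes", {})
    # Register the runtime only if it is missing; keep an existing entry as-is.
    runtimes.setdefault(
        "nvidia", {"path": "nvidia-container-runtime", "runtimeArgs": []}
    )
    updated["default-runtime"] = "nvidia"
    return updated


if __name__ == "__main__":
    # Example input: the runtime is registered but no default is set.
    existing = {
        "runtimes": {
            "nvidia": {"path": "nvidia-container-runtime", "runtimeArgs": []}
        }
    }
    print(json.dumps(with_nvidia_default_runtime(existing), indent=4))
```

Writing the result back to /etc/docker/daemon.json requires root privileges.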

Start the nano_llm container. The autotag helper resolves an image tag compatible with your JetPack/L4T version:

jetson-containers run $(autotag nano_llm)

Inside the container, start the NanoLLM video query agent with Python:

python3 -m nano_llm.agents.video_query --api=mlc \
  --model Efficient-Large-Model/VILA1.5-3b \
  --max-context-len 256 \
  --max-new-tokens 32 \
  --video-input /dev/video0 \
  --video-output webrtc://@:8554/output
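When you experiment with different cameras or token limits, it can help to generate this command from a few parameters instead of retyping it. The following sketch only assembles the argument list. It uses the same flags as the command above, while the function name and its keyword arguments are hypothetical conveniences.

```python
import shlex
from typing import List


def video_query_command(
    model: str = "Efficient-Large-Model/VILA1.5-3b",
    video_input: str = "/dev/video0",
    video_output: str = "webrtc://@:8554/output",
    max_context_len: int = 256,
    max_new_tokens: int = 32,
) -> List[str]:
    """Build the argument list for the video query agent shown above."""
    return [
        "python3", "-m", "nano_llm.agents.video_query",
        "--api=mlc",
        "--model", model,
        "--max-context-len", str(max_context_len),
        "--max-new-tokens", str(max_new_tokens),
        "--video-input", video_input,
        "--video-output", video_output,
    ]


# Print a copy-pasteable command line for a second camera.
print(shlex.join(video_query_command(video_input="/dev/video1")))
```

The list form can also be passed directly to subprocess.run inside the container, which avoids shell quoting issues.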

In Chrome or Chromium, open chrome://flags#enable-webrtc-hide-local-ips-with-mdns, set the flag to Disabled, and restart the browser.

The VideoQuery agent is employed to apply prompts to the incoming video feed using the selected vision-language model. After launching the agent with your camera, you can access the interface by navigating to https://<IP_ADDRESS>:8050 in your web browser.
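If the page does not load, a quick first check is whether the agent's web port is reachable from your workstation at all. The sketch below tests a plain TCP connection. The helper name and the JETSON_IP environment variable are assumptions made for this example, and a successful connection only shows that something is listening on the port, not that the agent is healthy.

```python
import os
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # JETSON_IP is a hypothetical variable; set it to your device's address.
    jetson_ip = os.environ.get("JETSON_IP")
    if jetson_ip:
        state = "reachable" if port_is_open(jetson_ip, 8050) else "not reachable"
        print(f"Web interface on {jetson_ip}:8050 is {state}")
```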

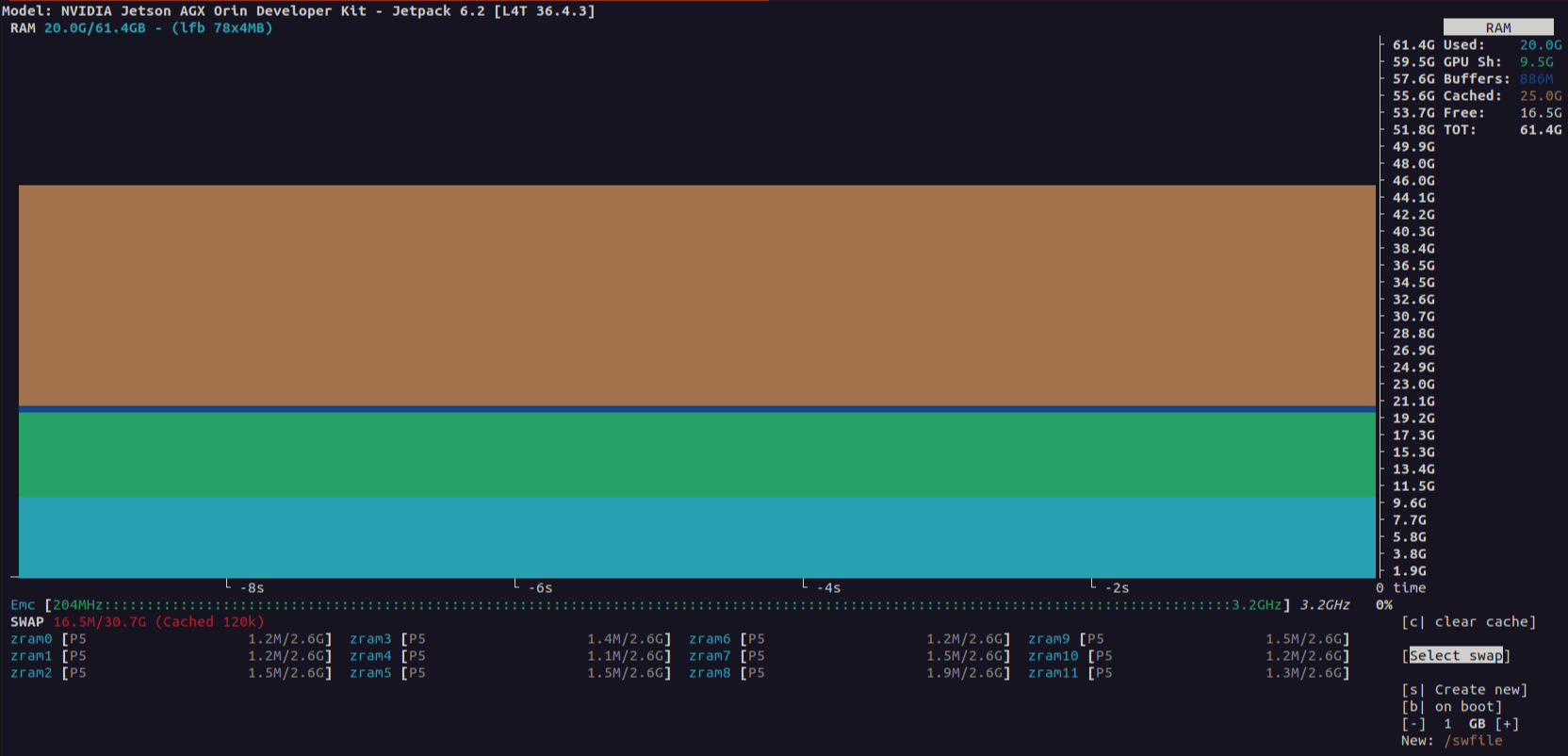

Monitoring the VILA Model on AVerMedia with jtop

The following screenshots show how we monitored system resource usage (CPU, GPU, memory, etc.) while running the VILA model on an NVIDIA Jetson device using the jtop command.

First we need to install jetson-stats.

sudo -H pip install -U jetson-stats

and start jtop with:

jtop
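Besides the interactive view, jetson-stats also ships a Python module, which is handy for recording resource usage over a whole run. The sketch below collects a few snapshots of the stats dictionary and writes them to a CSV file. The helper names and the output path are hypothetical, and because the exact keys depend on the board and the jetson-stats version, the writer simply records whatever keys it finds.

```python
import csv
from typing import Dict, Iterable, List


def sample_jtop(num_samples: int) -> List[Dict]:
    """Collect snapshots of jtop's stats dictionary (works only on a Jetson)."""
    # Imported lazily so the CSV helper below is usable without jetson-stats.
    from jtop import jtop

    samples: List[Dict] = []
    with jtop() as jetson:
        # jetson.ok() waits for the next reading from the jtop service.
        while jetson.ok() and len(samples) < num_samples:
            samples.append(dict(jetson.stats))
    return samples


def write_stats_csv(samples: Iterable[Dict], path: str) -> List[str]:
    """Write the samples to a CSV file and return the column names used."""
    rows = list(samples)
    fieldnames: List[str] = []
    for row in rows:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)
    with open(path, "w", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames, restval="")
        writer.writeheader()
        writer.writerows(rows)
    return fieldnames
```

On the Jetson, calling write_stats_csv(sample_jtop(30), "vila_jtop_log.csv") while the agent is running records 30 readings that you can plot afterwards.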

[Screenshots: jtop resource usage while running the VILA model]

Conclusion

Exploring Jetson AI Lab has been an insightful journey into real-time, multimodal AI processing. From setting up the environment to experimenting with vision-language models, this tutorial showcases the potential of edge AI in handling real-world applications. The ability to process both visual and textual inputs in real time opens doors for various use cases, from assistive AI applications to autonomous systems. As AI hardware continues to evolve, platforms like Jetson make it increasingly accessible for developers and researchers to push the boundaries of interactive AI.

🚀 Have you tried deploying AI on Jetson? Share your experience in the comments!